Face Tracking Technology

Using the Face Tracking Software Development Kit (SDK), Magic Mirror can locate and track human faces in digital images or video in real time. Input from the Kinect camera is analyzed to infer information about a tracked face, such as the head pose and facial expression.

Bounding Box Data for Face Detection

The face detection algorithm draws a box around the user's face and returns a bounding box value containing [x, y, Height, Width] of the face. These coordinates are used to render the tracked user's face and display it at preset coordinates [x, y] behind a prop with a face cutout, just as if you were posing behind a physical prop.
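The placement step can be illustrated with a minimal Python sketch. It assumes the cutout in the prop image is described by its own rectangle and that the face is scaled uniformly to fit inside it; the `Box` type and `fit_face_to_cutout` function are hypothetical names, and named fields are used instead of the `[x, y, Height, Width]` array so the order cannot be mixed up.

```python
from typing import NamedTuple, Tuple


class Box(NamedTuple):
    """Axis-aligned rectangle in pixels: top-left corner plus size (hypothetical type)."""
    x: float
    y: float
    width: float
    height: float


def fit_face_to_cutout(face: Box, cutout: Box) -> Tuple[float, float, float]:
    """Return (scale, dest_x, dest_y) for drawing the tracked face in the cutout.

    The face is scaled uniformly (no stretching) so that it fits entirely
    inside the cutout, then centred on the cutout.
    """
    scale = min(cutout.width / face.width, cutout.height / face.height)
    scaled_w = face.width * scale
    scaled_h = face.height * scale
    dest_x = cutout.x + (cutout.width - scaled_w) / 2
    dest_y = cutout.y + (cutout.height - scaled_h) / 2
    return scale, dest_x, dest_y


# Face found at (310, 120), 100 x 120 px; the prop's cutout is a 200 x 200 px hole.
scale, dest_x, dest_y = fit_face_to_cutout(Box(310, 120, 100, 120), Box(500, 80, 200, 200))
```

The face crop is then resized by `scale` and drawn at (`dest_x`, `dest_y`) underneath the prop layer, so the prop's opaque pixels frame the face.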

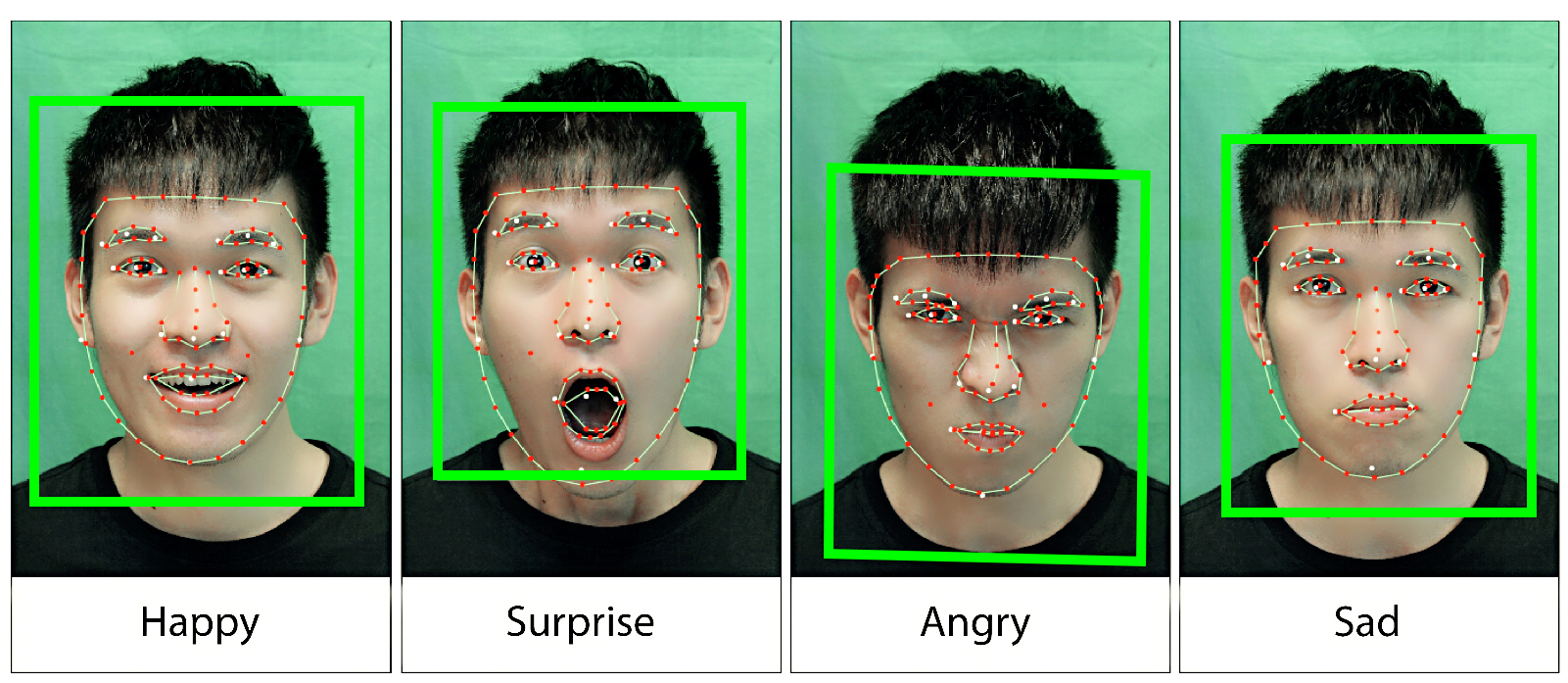

Facial Expression Recognition

After the user's face is detected, the face tracking engine returns an array of 87 2D points defining the outline of the face, eyebrows, eyes, nose and mouth. Changes in the coordinates of these points are measured to recognize the user's facial expression among the six basic emotions: anger, fear, disgust, happiness, sadness and surprise.
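As a rough illustration of measuring landmark changes, the sketch below compares the current landmarks against a neutral-face baseline and applies simple thresholds. It is a toy rule set covering only happiness and surprise, not the SDK's classifier; the landmark indices, the six-point subset and the 0.1 threshold are hypothetical.

```python
import math

# Hypothetical indices into the landmark list; the SDK's real 87-point layout differs.
MOUTH_LEFT, MOUTH_RIGHT, LIP_TOP, LIP_BOTTOM, BROW_LEFT, EYE_LEFT = range(6)


def displacement_features(neutral, current):
    """Measure how key landmarks moved relative to the user's neutral face.

    Both arguments are lists of (x, y) image coordinates with y growing
    downwards. Every distance is divided by the neutral mouth width so the
    features do not depend on how far the user stands from the camera.
    """
    scale = math.dist(neutral[MOUTH_LEFT], neutral[MOUTH_RIGHT])

    def gap(points, a, b):
        return math.dist(points[a], points[b]) / scale

    # Positive when the mouth corners move up (smaller y) compared with neutral.
    corner_lift = ((neutral[MOUTH_LEFT][1] - current[MOUTH_LEFT][1])
                   + (neutral[MOUTH_RIGHT][1] - current[MOUTH_RIGHT][1])) / (2 * scale)
    mouth_open = gap(current, LIP_TOP, LIP_BOTTOM) - gap(neutral, LIP_TOP, LIP_BOTTOM)
    brow_raise = gap(current, BROW_LEFT, EYE_LEFT) - gap(neutral, BROW_LEFT, EYE_LEFT)
    return corner_lift, mouth_open, brow_raise


def classify_expression(neutral, current, threshold=0.1):
    """Toy rules covering only two of the six basic emotions."""
    corner_lift, mouth_open, brow_raise = displacement_features(neutral, current)
    if mouth_open > threshold and brow_raise > threshold:
        return "surprise"
    if corner_lift > threshold:
        return "happiness"
    return "neutral"
```

Normalizing by a reference distance matters because raw pixel displacements grow as the user steps closer to the mirror.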

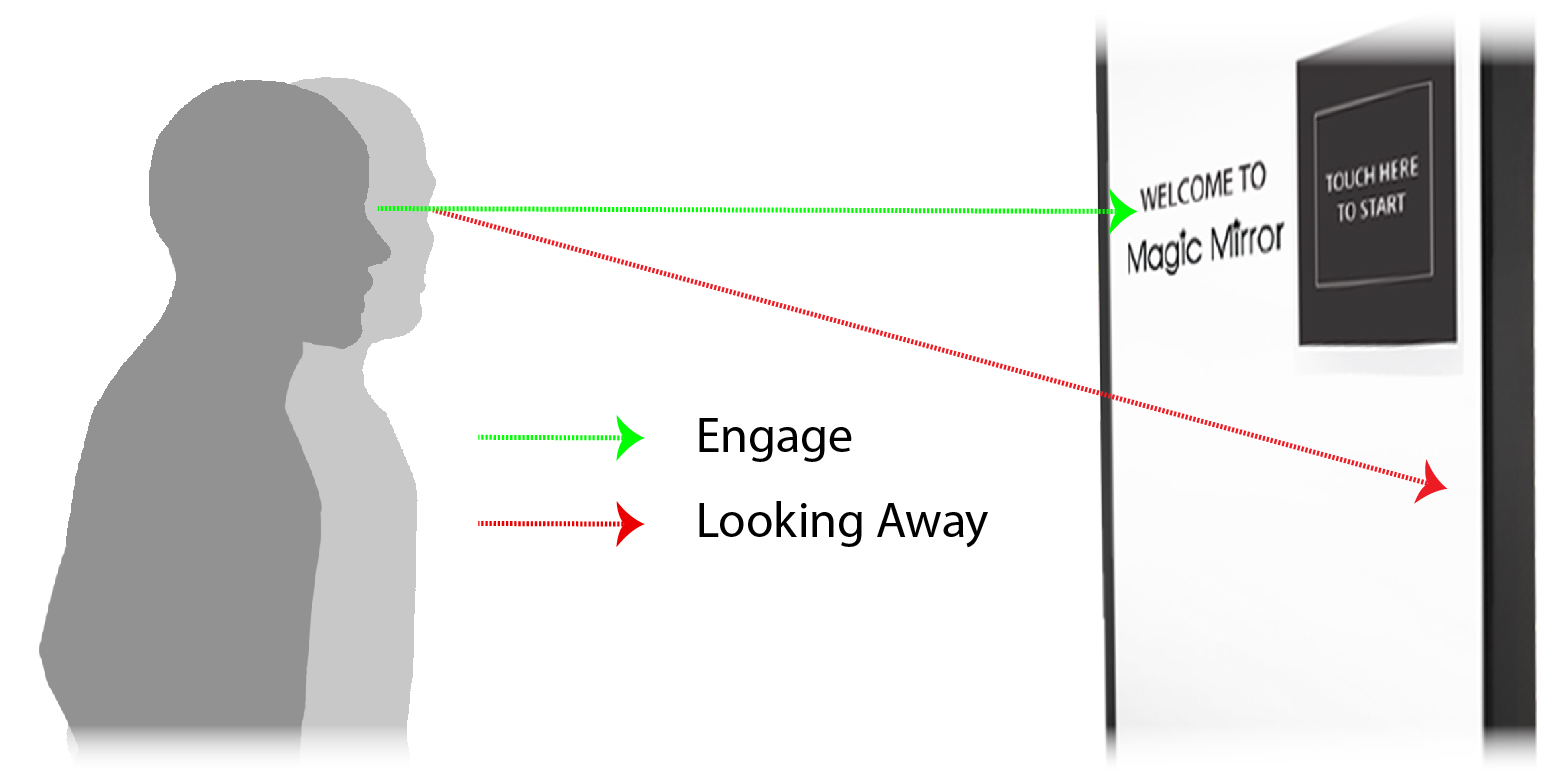

Face Properties to Track User Engagement

The Face Tracking SDK returns face properties: a read-only map of key/value pairs that provides information about the appearance or state of a user's face. By combining the LookingAway property (set when the user is looking somewhere other than the mirror content) with the eye-closed properties, we can determine whether the user is engaged, the engagement duration and similar metrics, and record this data in the viewing analytics.
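A minimal sketch of the engagement calculation, assuming each frame's properties have already been reduced to booleans (the SDK's own values may be richer than a simple yes/no) and that a user counts as engaged when not looking away and not having both eyes closed. `FaceFrame` and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FaceFrame:
    """Face properties for one frame, simplified to booleans (hypothetical type)."""
    timestamp: float          # seconds since the session started
    looking_away: bool
    left_eye_closed: bool
    right_eye_closed: bool


def is_engaged(frame: FaceFrame) -> bool:
    # Facing the mirror content with at least one eye open.
    both_closed = frame.left_eye_closed and frame.right_eye_closed
    return not frame.looking_away and not both_closed


def engagement_summary(frames: List[FaceFrame]) -> dict:
    """Total engaged time and longest continuous engaged stretch, in seconds.

    Each frame's state is assumed to last until the next frame's timestamp,
    so the final frame contributes no duration.
    """
    total = longest = current = 0.0
    for frame, nxt in zip(frames, frames[1:]):
        dt = nxt.timestamp - frame.timestamp
        if is_engaged(frame):
            current += dt
            total += dt
            longest = max(longest, current)
        else:
            current = 0.0  # the engaged stretch is broken
    return {"engaged_seconds": total, "longest_stretch_seconds": longest}
```

Requiring both eyes to be closed before counting a frame as disengaged keeps a wink or a single misdetected eye from breaking an engaged stretch.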

Maya – 3D Modeling, Texturing and Rigging

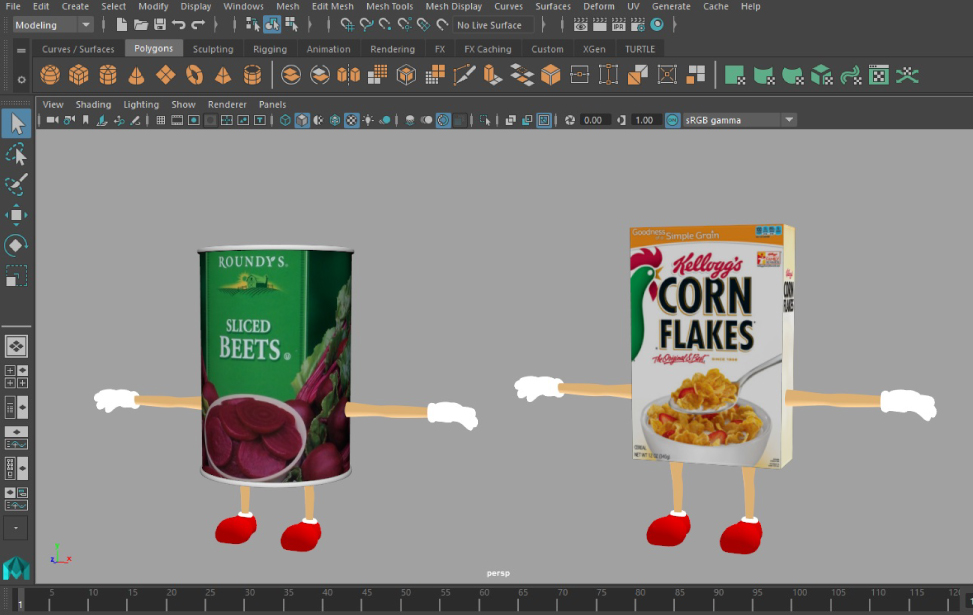

Several techniques, including 3D modeling, texturing and rigging, have been applied to generate photo-realistic 3D models. These models are the key component of 3D virtual dressing, the interactive product catalogue and similar apps, simulating a real-life shopping experience as if you were browsing the real products in three dimensions.

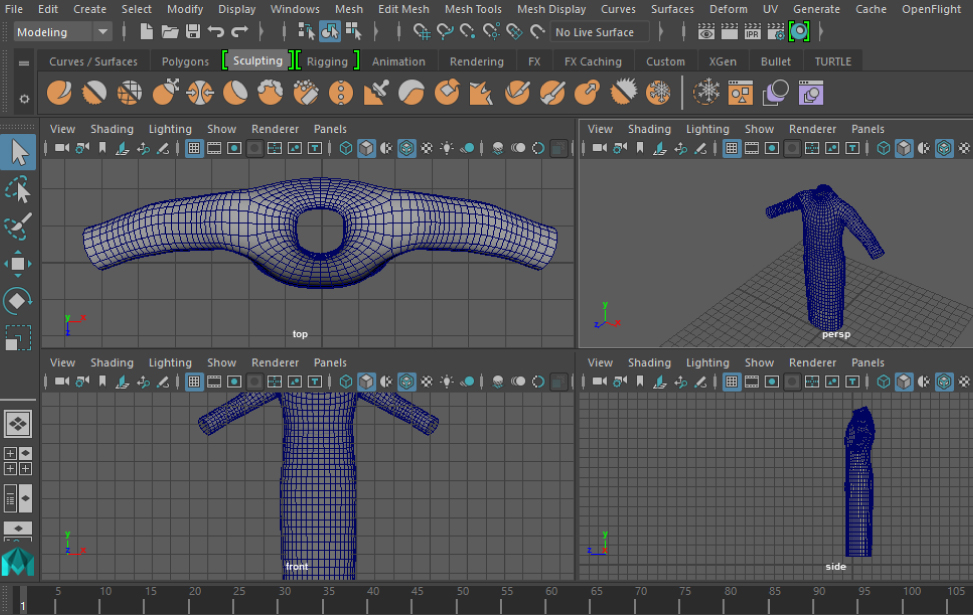

A. 3D Modeling

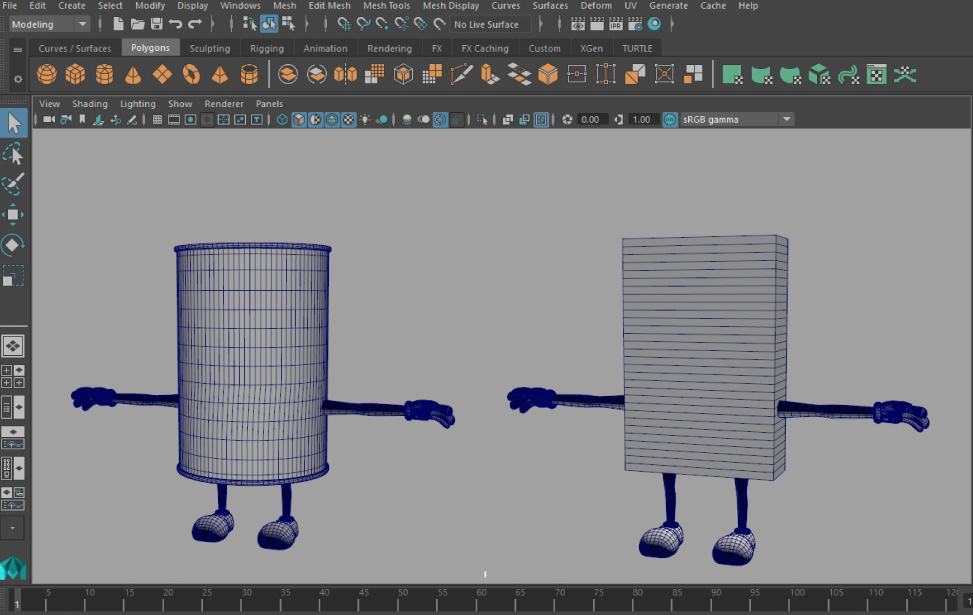

A 3D wireframe model of a solid object, such as a mannequin or a product packaging box, is formed from high-resolution 2D images by defining its shape with characteristic lines and points.

B. Texture Mapping

The texture map, a combination of details, surface textures and color, is applied to the 3D model. Pixels from the texture are wrapped and mapped onto the surface of the 3D polygons to produce a photo-realistic 3D product model. For example, Kellogg's packaging artwork can be applied to a plain white box.
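In a renderer, texture mapping boils down to finding, for each point on a polygon, which texture pixel to show. A minimal sketch, assuming triangles whose vertices carry (u, v) texture coordinates and a nearest-neighbour lookup (Maya and real renderers use filtered sampling); the function names are hypothetical.

```python
def interpolate_uv(weights, uvs):
    """Blend a triangle's three vertex UVs using barycentric weights.

    `weights` are the point's barycentric coordinates (they sum to 1) and
    `uvs` are the (u, v) texture coordinates stored on the three vertices.
    """
    u = sum(w * uv[0] for w, uv in zip(weights, uvs))
    v = sum(w * uv[1] for w, uv in zip(weights, uvs))
    return u, v


def sample_nearest(texture, u, v):
    """Nearest-neighbour texture lookup.

    `texture` is a list of pixel rows; this sketch assumes v = 0 is the top
    row (conventions differ between tools).
    """
    height, width = len(texture), len(texture[0])
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return texture[row][col]


# A 2 x 2 "artwork" and one triangle of the box face, UV-mapped to its corners.
texture = [["red", "green"],
           ["blue", "white"]]
triangle_uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
color = sample_nearest(texture, *interpolate_uv((0.25, 0.5, 0.25), triangle_uvs))
```

Because the UVs are stored per vertex, the same packaging artwork stays attached to the box faces however the model is rotated.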

C. Rigging

Rigging is the process of constructing a series of 'bones' to prepare the 3D model for movement. A digital skeleton made up of joints and bones is bound to the 3D model. Each joint acts as a 'handle' that the animator can use to rotate and bend the model into a desired pose, creating a set of animation controls.
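The 'joint as handle' idea can be shown with forward kinematics on a 2D bone chain. This is a simplified sketch, not Maya's rigging system: real rigs are 3D and also bind mesh vertices to bones with skin weights. The function name and the 2D simplification are assumptions.

```python
import math


def forward_kinematics(bone_lengths, joint_angles_deg, root=(0.0, 0.0)):
    """Return the 2D position of every joint in a simple bone chain.

    Each angle is relative to the parent bone, so rotating one joint moves
    every bone further down the chain; this is what lets a joint act as a
    handle for posing.
    """
    x, y = root
    heading = 0.0
    positions = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles_deg):
        heading += math.radians(angle)  # accumulate rotations down the chain
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions


# An "arm" with upper-arm and forearm bones: bend only the elbow by 90 degrees.
arm = forward_kinematics([0.3, 0.25], [0.0, 90.0])
```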

Image Post-Processing

Image post-processing plays a key role in several Magic Mirror apps, such as virtual try-on and beauty retouch, to achieve the required photo effects.

Digital Superimposition for Virtual Try-On

Magic Mirror is able to dynamically superimpose fashion or non-fashion items onto the photos taken, at different placements depending on the item. This is achieved by using the real-time image processing functions in OpenCV to detect the position of the shoulders, eyes and head in the photos.

A. Superimpose garment image based on the shoulder points.

B. Superimpose glasses image based on eyes’ position.
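Both placements reduce to the same geometry: two detected points (the eyes, or the shoulders) fix the position, size and tilt of the overlay. A minimal sketch, assuming the point detection has already been done (for example with OpenCV) and that the overlay image's own anchor distance is known; `place_by_two_points` is a hypothetical helper.

```python
import math


def place_by_two_points(p_left, p_right, item_anchor_distance):
    """Compute where and how to draw an overlay anchored on two body points.

    item_anchor_distance is the distance, in the overlay image's own pixels,
    between the two points that should land on p_left and p_right (for example
    the centres of the two lenses, or the two shoulder seams).
    Returns (centre, scale, angle_degrees).
    """
    dx = p_right[0] - p_left[0]
    dy = p_right[1] - p_left[1]
    centre = ((p_left[0] + p_right[0]) / 2, (p_left[1] + p_right[1]) / 2)
    scale = math.hypot(dx, dy) / item_anchor_distance   # resize factor
    angle = math.degrees(math.atan2(dy, dx))             # head or body tilt
    return centre, scale, angle


# Eyes detected 60 px apart; the glasses image has lens centres 120 px apart.
centre, scale, angle = place_by_two_points((100, 200), (160, 200), 120)
```

For a garment, the drawing position would normally be offset below the shoulder line rather than centred on it.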


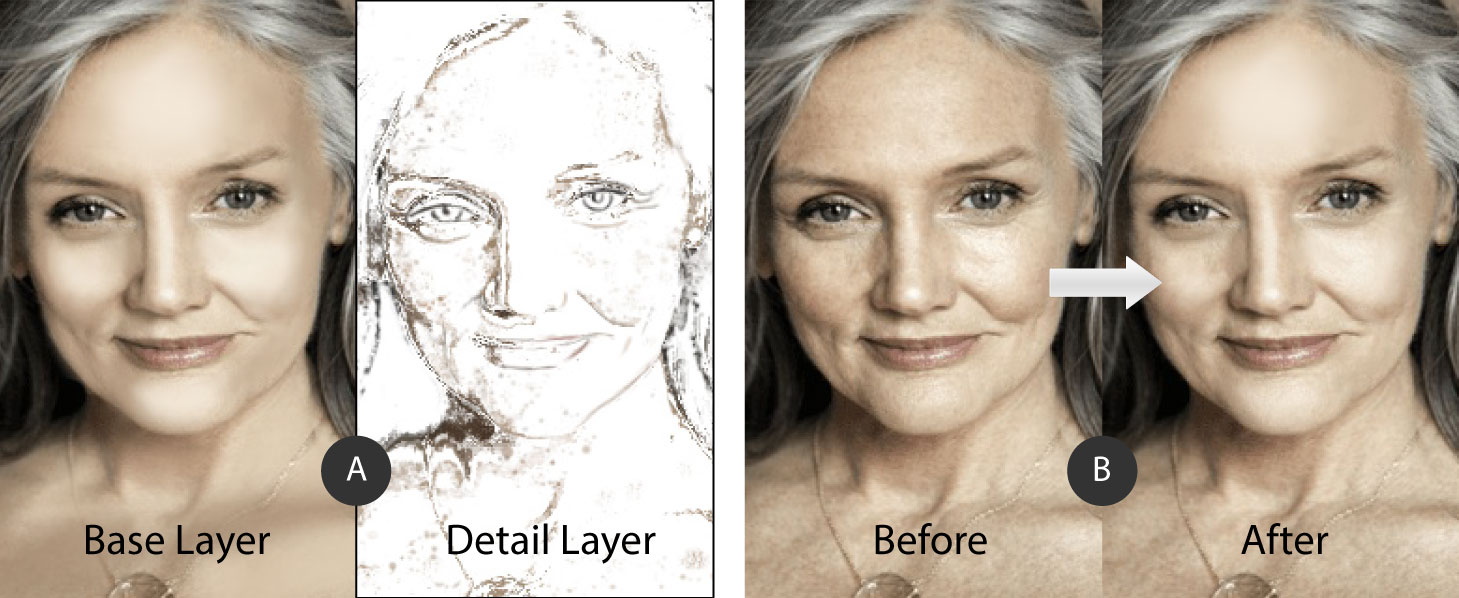

Face Beautification

Magic Mirror incorporates multiple advanced image enhancement methods, such as automatic face beautification and color enhancement, to beautify faces. Captured images are separated into layers so that image quality can be enhanced without losing overall harmony.

A. Faces are extracted and separated into two layers: a base layer containing smooth skin pixels and a detail layer containing blemishes (e.g. wrinkles, pores) on the face.

B. The magnitude of the detail layer can be reduced to smooth the face and lower the visibility of blemishes.
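The two-layer idea can be sketched on a small grayscale image. This sketch swaps in a simple box blur to produce the base layer; practical beautification usually uses an edge-preserving filter (such as a bilateral filter) so that facial edges stay sharp. The function names and the default `detail_strength` of 0.3 are hypothetical.

```python
def box_blur(image, radius=1):
    """Mean filter over a (2*radius+1)^2 window on a grayscale image.

    `image` is a list of rows of numbers; at the borders only the pixels
    that fall inside the image are averaged.
    """
    h, w = len(image), len(image[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [image[rr][cc]
                      for rr in range(max(0, r - radius), min(h, r + radius + 1))
                      for cc in range(max(0, c - radius), min(w, c + radius + 1))]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def beautify(image, detail_strength=0.3, radius=1):
    """Split into base + detail layers and recombine with the detail attenuated.

    detail_strength = 1.0 returns the original image (up to floating-point
    rounding); 0.0 returns the base layer alone.
    """
    base = box_blur(image, radius)  # base layer: smooth skin tones
    result = []
    for img_row, base_row in zip(image, base):
        # detail layer = image - base (pores, wrinkles); scale it, then add back
        result.append([b + detail_strength * (p - b) for p, b in zip(img_row, base_row)])
    return result
```

Keeping a fraction of the detail layer, rather than discarding it, is what stops the skin from looking artificially flat.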

Kinect Applications

Using the Kinect body sensor, Magic Mirror offers apps such as hair color studio, color-changing outfit and product genie, which benefit the fashion and beauty industries while providing an interactive user experience.

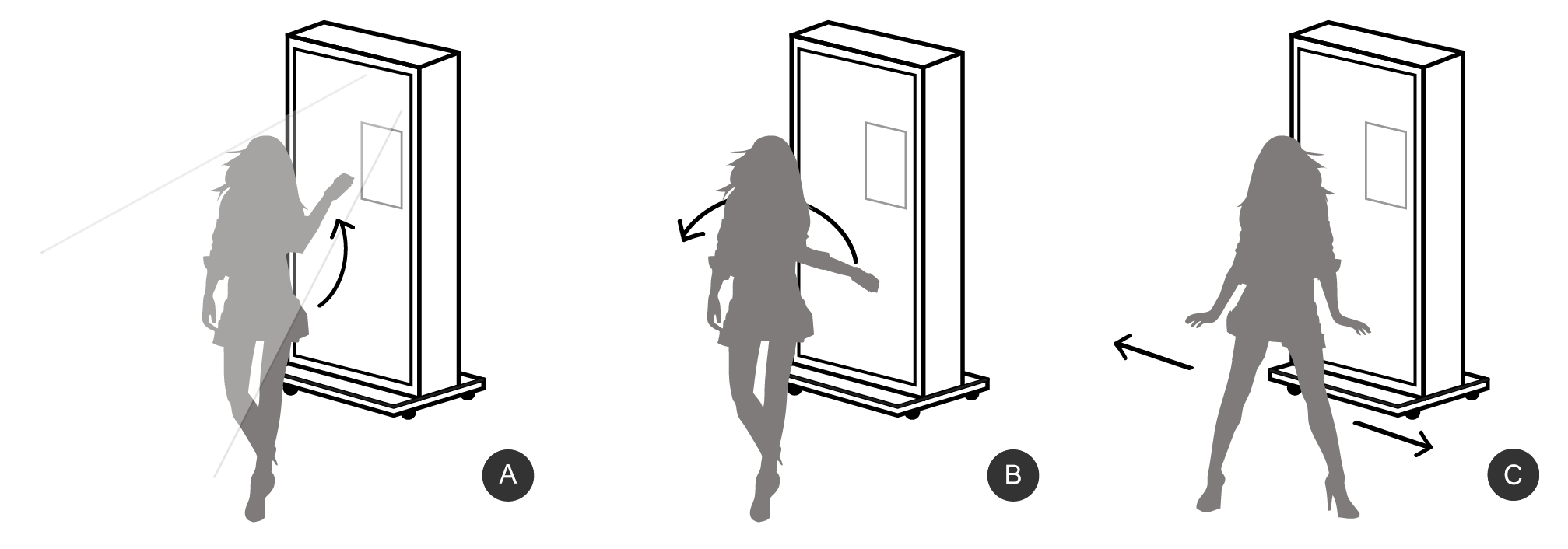

Gesture Recognition

Magic Mirror can 'read' the body motion of users, mainly body and hand gestures, to perform specific functions such as taking a photo, browsing a fashion collection or playing a game. By understanding human body language, Magic Mirror builds a richer and more interactive experience with its users.

A. Raise hand to take photo

B. Swipe to 'try on' different clothes

C. Move the body to grab the falling items
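A minimal sketch of the first two gestures, assuming skeleton joints are available as (x, y) positions in metres with y increasing upwards, and using hypothetical joint names and thresholds rather than the Kinect SDK's own types.

```python
def is_hand_raised(joints, margin=0.1):
    """True when either hand is at least `margin` metres above the head.

    `joints` maps hypothetical joint names to (x, y) positions in metres,
    with y increasing upwards.
    """
    head_y = joints["head"][1]
    return any(joints[hand][1] > head_y + margin for hand in ("hand_left", "hand_right"))


def detect_swipe(hand_xs, min_distance=0.4):
    """Classify a short window of hand x-positions (metres) as a swipe.

    Returns "left", "right" or None, in sensor x terms; whether that is the
    user's left or right depends on how the view is mirrored. Only net
    horizontal travel is checked; a production recogniser would also check
    speed and vertical drift.
    """
    if len(hand_xs) < 2:
        return None
    travel = hand_xs[-1] - hand_xs[0]
    if travel >= min_distance:
        return "right"
    if travel <= -min_distance:
        return "left"
    return None
```

The `margin` and `min_distance` thresholds trade responsiveness against accidental triggers from people simply moving in front of the mirror.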

Kinect Color Engine

A. Using OpenCV to detect the color of the object of interest, Magic Mirror's product genie can recommend matching products based on the color detected from the user's outfit.

B. By extracting the colored object, Magic Mirror can convert it into multiple colors, allowing users to visualize their outfit in different colors without changing clothes.
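Color detection and recoloring are typically done in HSV space, where hue is separated from shading. OpenCV offers this through its color conversion functions; to stay self-contained, the sketch below uses Python's standard `colorsys` module instead. The hue bins, thresholds and function names are hypothetical, and the pixel mask is assumed to come from an earlier segmentation step.

```python
import colorsys
from collections import Counter

# Hypothetical hue bins: (exclusive upper bound in degrees, name).
HUE_NAMES = [(15, "red"), (45, "orange"), (70, "yellow"), (170, "green"),
             (260, "blue"), (330, "purple"), (360, "red")]


def hue_name(rgb):
    """Name the color of an (r, g, b) pixel with 0-255 channels."""
    h, s, v = colorsys.rgb_to_hsv(*(c / 255 for c in rgb))
    if v < 0.2:
        return "black"
    if s < 0.2:
        return "white" if v > 0.8 else "grey"
    degrees = h * 360
    for upper, name in HUE_NAMES:
        if degrees < upper:
            return name
    return "red"


def dominant_color(pixels):
    """Most common color name among the outfit's pixels."""
    return Counter(hue_name(p) for p in pixels).most_common(1)[0][0]


def recolor(pixels, mask, target_hue_degrees):
    """Give masked pixels a new hue while keeping their saturation and value,
    so folds and shadows in the fabric are preserved."""
    out = []
    for rgb, selected in zip(pixels, mask):
        if not selected:
            out.append(rgb)
            continue
        _, s, v = colorsys.rgb_to_hsv(*(c / 255 for c in rgb))
        r, g, b = colorsys.hsv_to_rgb(target_hue_degrees / 360, s, v)
        out.append(tuple(round(c * 255) for c in (r, g, b)))
    return out
```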

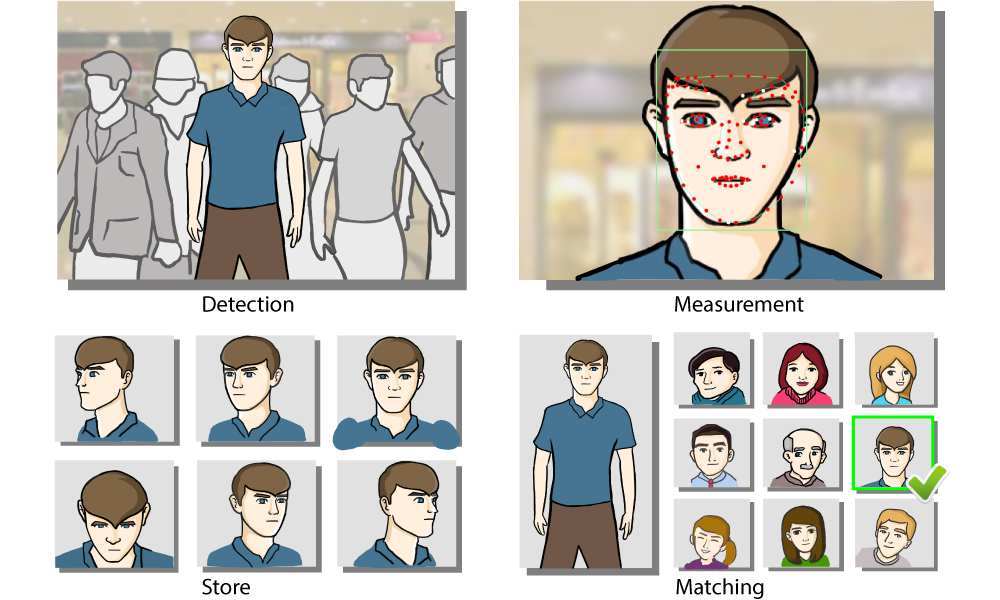

Facial Recognition

Each person's face has a combination of facial features that makes everyone look different. With facial recognition technology, Magic Mirror can identify users based on the facial features collected in previous sessions.

Identify Faces based on Facial Features

| Step | Description |
|---|---|
| Detection | When users stand in front of Magic Mirror, their faces are extracted from the rest of the scene. |
| Measurement | Approximately 80 nodal points on the face are used to measure the facial features, e.g. distance between the eyes, width of the nose, shape of the cheekbones and length of the jaw line. |
| Store | Information about the user's facial features is stored in the facial database. |
| Matching | By analyzing and comparing the patterns against a database of facial data, Magic Mirror recognizes a returning user and displays details or images relevant to that specific user. |
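The measurement and matching steps can be sketched with the classic geometric approach the table describes: distances between landmark pairs form a feature vector, and a returning user is the nearest stored vector within a threshold. The landmark pairs, threshold and function names are hypothetical; modern systems usually replace hand-picked distances with learned face embeddings.

```python
import math


def feature_vector(landmarks, pairs):
    """Distances between chosen landmark pairs, divided by the first distance
    (e.g. the distance between the eyes) so the vector is scale invariant."""
    dists = [math.dist(landmarks[a], landmarks[b]) for a, b in pairs]
    return [d / dists[0] for d in dists]


def match(query, database, threshold=0.05):
    """Return the ID of the closest stored face, or None for a new user."""
    best_id, best_dist = None, float("inf")
    for user_id, stored in database.items():
        d = math.dist(query, stored)  # Euclidean distance between feature vectors
        if d < best_dist:
            best_id, best_dist = user_id, d
    return best_id if best_dist <= threshold else None
```

The threshold decides the trade-off between greeting a stranger by the wrong name and failing to recognize a returning user.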

Background Subtraction

Using Kinect to identify the object of interest, Magic Mirror achieves high-quality background removal, with or without a green screen backdrop, using chroma keying or the GrabCut algorithm.

Chroma Key

Chroma key, a post-processing technique, has been incorporated into Magic Mirror to separate the foreground object from a green screen background and combine it with a selected image or video stream (as the background), creating an image with a custom background.
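A minimal chroma-key sketch, assuming equal-sized images stored as rows of (r, g, b) tuples and treating a pixel as backdrop when its green channel exceeds both red and blue by a fixed margin. The margin of 40 is a hypothetical value; production keyers use soft alpha mattes and green-spill suppression rather than this hard cut.

```python
def is_green_screen(rgb, dominance=40):
    """True when green exceeds both red and blue by more than `dominance`."""
    r, g, b = rgb
    return g - max(r, b) > dominance


def chroma_key(foreground, background, dominance=40):
    """Replace green-screen pixels in `foreground` with the matching
    `background` pixel; both are equal-sized lists of rows of (r, g, b)."""
    return [[bg if is_green_screen(fg, dominance) else fg
             for fg, bg in zip(fg_row, bg_row)]
            for fg_row, bg_row in zip(foreground, background)]
```

Comparing green against the other channels, rather than using a fixed green value, keeps the key working when the backdrop is unevenly lit.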

GrabCut Algorithm

Using Kinect to identify the object of interest, Magic Mirror employs the GrabCut algorithm, an interactive foreground extraction method based on iterated graph cuts, to extract the foreground object accurately from a complex background.

How it works:

A. Draw a rectangle around the foreground object

B. Segment the image with the iterated graph cuts algorithm to define edges

C. Correct faulty results with a foreground brush (white) and a background brush (green)

D. The final result: high-quality background removal

Touch Screen

The touch screen provides accurate touch positions for interacting with Magic Mirror, for example when entering an email address to share photos.

Projected Capacitive Touch (PCT) Technology

The touch sensor embeds an array of micro-fine conductive electrodes, printed along the X and Y axes behind a protective touch surface, which allows the touch position to be detected through a thick overlay. As the user's finger approaches the touch surface, the electrode showing the peak change in frequency determines the touch position accurately.

A. PCT technology eliminates the need for a mirror cutout to touch the touchscreen directly.

B. It senses the finger position from the electric field projected through the mirror.
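A simplified model of locating the touch, assuming the controller reports one signal change per X electrode and per Y electrode: take the electrode with the peak change and refine it with a weighted centroid of its neighbours. Real PCT controllers use their own firmware; the function names and the 5 mm electrode pitch are hypothetical.

```python
def axis_position(deltas, pitch_mm):
    """Estimate finger position along one axis from per-electrode signal changes.

    Finds the electrode with the peak change, then refines it with a weighted
    centroid over that electrode and its two neighbours for sub-electrode
    resolution. Returns millimetres from the first electrode, or None.
    """
    peak = max(range(len(deltas)), key=lambda i: deltas[i])
    if deltas[peak] <= 0:
        return None  # no electrode changed: nothing is touching
    window = range(max(0, peak - 1), min(len(deltas), peak + 2))
    weights = {i: max(0.0, deltas[i]) for i in window}  # ignore negative noise
    centroid = sum(i * w for i, w in weights.items()) / sum(weights.values())
    return centroid * pitch_mm


def touch_position(x_deltas, y_deltas, pitch_mm=5.0):
    """Combine the X and Y electrode arrays into one (x, y) touch point in mm."""
    x = axis_position(x_deltas, pitch_mm)
    y = axis_position(y_deltas, pitch_mm)
    return None if x is None or y is None else (x, y)
```

Interpolating between neighbouring electrodes is what lets a coarse electrode grid, read through a thick mirror, still report a precise touch point.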
